CONTENT MODERATION SERVICES

What is Content Moderation?

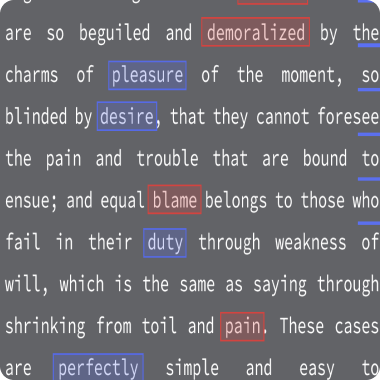

What We Help Identify

Our content moderation solutions detect many forms of content that may violate platform rules or community guidelines. Typical content categories we can identify include:

Types of Content Moderation Services

Training Data provides several types of content moderation services, each designed to address specific needs and requirements. Here are some common types:

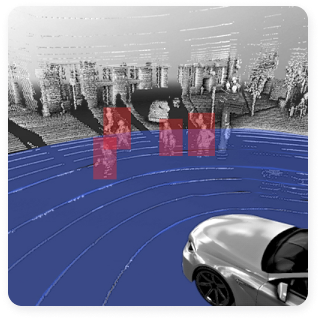

Content Moderation Use Cases

Social Media Platforms

Content moderation on platforms like Facebook, Twitter, and Instagram involves filtering out hate speech, bullying, graphic violence, and other harmful content to create a safer online environment.

E-commerce

Platforms like Amazon and eBay rely on content moderation to ensure that product listings are accurate, comply with regulations, and do not contain prohibited items or misleading information.

Gaming Industry

On online gaming platforms such as Twitch, Steam, and Discord, content moderation involves monitoring chat conversations, user interactions, and in-game content to prevent harassment, cheating, and inappropriate behavior.

News Media and Publishing

News portals and publishing platforms moderate user comments to prevent the spread of misinformation, hate speech, and abusive behavior.

Healthcare

Moderating interactions between healthcare providers and patients helps maintain professionalism, confidentiality, and ethical standards in telemedicine consultations. It also protects the privacy and security of patient information, supports compliance with healthcare regulations such as HIPAA, and helps prevent the spread of medical misinformation.

Communities & Forums

Content moderation in online communities and forums involves enforcing community guidelines to promote respectful discussions and prevent harassment, trolling, and flame wars.

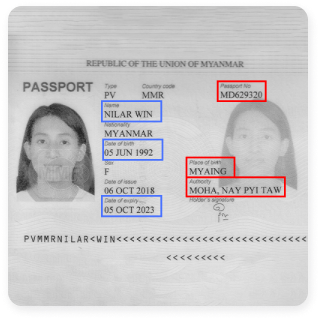

Dating Websites

Content moderation on dating platforms involves verifying user profiles and photos to prevent catfishing, impersonation, and fraudulent activities. It also detects and removes inappropriate behavior such as harassment, explicit content, and solicitation.

Children's Websites

Content moderation in children's websites and apps focuses on filtering out inappropriate content such as violence, nudity, explicit language, and mature themes.

Advertising Networks

Moderation services review ad creatives and landing pages to ensure compliance with advertising policies, industry standards, and legal regulations. They also assess ad placements to keep ads from appearing alongside harmful or inappropriate content, protecting brand reputation and integrity.

Languages We Support for Content Moderation

German

Italian

Dutch

Danish

French

Arabic

Norwegian

Polish

Portuguese

Romanian

Russian

Spanish

Swedish

Turkish


Stages of Work

01. Application

Leave a request on the website for a free consultation with an expert. The account manager will guide you through the services, timelines, and pricing.

02. Free pilot

We run a test pilot project for you and provide a golden set. Based on it, we determine the final technical requirements and approve the project metrics.

03. Agreement

We prepare a contract and any other documentation your accountants and lawyers request.

04. Workflow customization

We select a suitable set of tools and assign an experienced manager who stays in touch with you on all project details.

05. Quality control

Data is uploaded for verification iteratively, so your team can review and approve the collected or annotated data.

06. Post-payment

You pay for the work after receiving the data in the agreed quality and quantity.

Timeline

- 24 hours: Application
- 24 hours: Consultation
- 1 to 3 days: Pilot
- 1 to 5 days: Conducting a pilot
- 1 day to several years: Carrying out work on the project
- 1 to 5 days: Quality control

Payment follows delivery of the data in the established quality and quantity.

Why Training Data

- Quality Assurance:
  - Enhanced Data Accuracy
  - Consistency in Labels
  - Reliable Ground Truth
  - Mitigation of Annotation Biases
  - Cost and Time Efficiency

- Data Security and Confidentiality:
  - GDPR Compliance
  - Non-Disclosure Agreement
  - Data Encryption
  - Multiple Data Storage Options
  - Access Controls and Authentication

- Expert Team:
  - 6 years in the industry
  - 35 top project managers
  - 40+ languages
  - 100+ countries
  - 250k+ assessors

- Flexible and Scalable Solutions:
  - 24/7 customer service
  - 100% post-payment
  - $550 minimum order
  - Variable Workload
  - Customized Solutions